A long time ago I was an active duty Marine infantryman. I went UA (AWOL) after returning from Iraq. I had been convinced that filing for conscientious objector status was in my interest, and in the process I rattled too many of my own subconscious cages. The next thing I knew, I was living outside the acceptable range of modern culture. When I turned myself in at MCB Quantico after 16 months in varying states of absenteeism, I wasn’t arrested and placed in the brig as I expected. I was put in a ‘casualty platoon’ for monitoring and to await a court martial.

I didn’t appreciate this much. I soon discovered that, despite conditions within the casualty platoon being ‘tantamount to confinement’ (the words of the judge at my eventual court martial, not mine), I was not receiving credit for time served while I was there. When I asked why I couldn’t be sent to the brig in advance of my trial, since I knew I would be convicted, I was informed, “The computer says you aren’t a flight risk.” So I went UA again for a while and eventually snuck back onto MCB Quantico to ask whether I was now considered a flight risk.

After some data entry the Sgt informed me that I would indeed be sent to the brig.

INSANCTUM:

Let’s be honest, is free will even a thing anymore?

CHATGPT:

That depends on how you define it. If you mean absolute free will—the idea that we make choices independent of all prior causes—then probably not. Neuroscience suggests that decisions form in the brain before we’re even consciously aware of them. Our upbringing, genetics, environment, and even algorithms shaping our daily interactions heavily influence what we perceive as “choices.”

But if you mean practical free will, as in the ability to weigh options and act with some agency within our constraints, then yes, to an extent. Even if our decisions are shaped by forces outside our control, we still experience choice and consequence in a way that makes free will functionally real.

In a world where AI, data tracking, and behavioral nudging are fine-tuning our perceptions and desires, our will might be freer in feeling than in fact. But even knowing that, does it really change how you live?

INSANCTUM:

In what way are our perceptions and desires modified by those last subjects?

CHATGPT:

Our perceptions and desires are shaped in ways that often feel invisible to us because they work on deep cognitive and emotional levels. Here’s how AI, data tracking, and behavioral nudging influence us:

1. AI & Algorithmic Manipulation

- Social media and search engines personalize content based on past interactions, reinforcing biases and shaping what we even consider as reality.

- AI-driven recommendations (news, videos, products) subtly push certain narratives, influencing our beliefs and consumer habits.

- Language models (like me) structure the information we access, subtly framing concepts in ways that guide interpretation.

2. Data Tracking & Predictive Analytics

- Every digital interaction is collected, analyzed, and used to anticipate what we want before we even want it—this includes ads, shopping suggestions, and even political messaging.

- By mapping patterns in behavior, corporations and governments create psychographic profiles that allow for hyper-targeted persuasion.

- Our online activity, location, and even biometrics (facial expressions, typing speed) can be analyzed to exploit emotional states, pushing products or ideas at the perfect moment of vulnerability.

3. Behavioral Nudging & Decision Architecture

- Small tweaks in digital environments (color schemes, button placements, default options) manipulate choices without our awareness—e.g., making the “Accept Cookies” button bigger and brighter than the “Manage Preferences” option.

- “Dark patterns” in UX design (like infinite scroll) keep us engaged far longer than we intend, reinforcing dopamine loops and addictive behaviors.

- Governments and corporations use choice architecture to steer populations toward desired behaviors, like structuring donation prompts in ways that make declining feel guilt-inducing.

The End Result?

- Our preferences are not truly ours. They are shaped by a complex interplay of AI-driven suggestions, psychological nudges, and data-driven exploitation of our cognitive biases.

- We think we are making independent choices, but our perception of available options has already been curated by forces we don’t control.

- Desires are manufactured. You might think you suddenly want a particular gadget, lifestyle, or ideology—but often, those wants were slowly implanted over time.

The unsettling part? Even knowing all this, awareness doesn’t always grant immunity—because our emotional and subconscious reactions are still being played like an instrument.

INSANCTUM:

What is a psychographic profile? Would you give me an example based on John Doe?

CHATGPT:

A psychographic profile is a detailed analysis of a person’s beliefs, values, interests, lifestyle, and personality traits—essentially a psychological blueprint of what makes someone tick. Unlike demographic data (age, gender, income), which tells who someone is, psychographics reveal why they behave the way they do.

Example: John Doe’s Psychographic Profile

Let’s say John Doe is a 35-year-old software engineer who enjoys gaming, follows tech news, and occasionally posts about politics. A company analyzing his digital footprint might build the following profile:

1. Personality & Values:

- Introverted but socially engaged in online communities.

- Values rationality, innovation, and freedom of speech.

- Slightly skeptical of mainstream media but trusts science and expert opinions.

- Wants financial stability but also dreams of breaking out of the 9-to-5.

2. Interests & Hobbies:

- Loves cyberpunk aesthetics and AI advancements.

- Enjoys first-person shooters & RPGs.

- Interested in crypto and decentralized finance.

- Watches long-form podcasts from intellectual figures.

3. Political & Social Views:

- Libertarian-leaning, but open to progressive tech policies.

- Distrusts big government but supports tech-driven solutions to social issues.

- Believes in personal responsibility but acknowledges systemic factors.

- Opposed to heavy-handed censorship but dislikes misinformation.

4. Consumer Behavior:

- Likely to buy high-end gaming peripherals and mechanical keyboards.

- Subscribed to YouTube Premium to avoid ads.

- Prefers privacy-focused tech like VPNs and encrypted messaging apps.

- More likely to purchase crowdfunded gadgets over traditional brands.

5. Emotional Triggers & Marketing Angles:

- Scarcity & FOMO: Will respond to “limited-time tech drops”.

- Autonomy & Control: Prefers marketing that emphasizes “taking back control of data & privacy.”

- Techno-optimism: More receptive to messages like “the future is here—embrace the next revolution.”

- Tribal Identity: Feels validated when part of an exclusive online community (e.g., early adopters).

How This Profile is Used:

- Advertising:

  - He’ll see ads for AI-powered productivity tools, cyberpunk-themed products, and decentralized finance platforms.

  - Targeted with “Top 5 Must-Have Gadgets for Gamers” articles.

- Political Influence:

  - A political campaign might adjust its messaging to appeal to his belief in tech-driven solutions & free speech.

- Content Curation:

  - YouTube, TikTok, and Twitter will adjust his feed to show more crypto news, libertarian debates, and cyberpunk aesthetics to keep him engaged.

- Behavioral Manipulation:

  - Knowing he’s prone to FOMO & exclusivity, companies will send him early access invites for beta software or pre-order deals.

Conclusion? John thinks he’s just browsing the web, making his own choices—but his perception of the world has been subtly shaped by a tailored feedback loop designed to keep him engaged, spending, and aligned with certain ideas.

Now imagine this at scale. That’s why psychographic profiling is one of the most powerful tools in modern influence and persuasion.

INSANCTUM:

What other metadata is likely known about John in this example? Based on the mean, genetics, employment, intentions?

CHATGPT:

John Doe’s metadata goes far beyond just his interests and preferences—his digital footprint and real-world behavior allow companies, governments, and AI models to infer deep insights into his biological, financial, social, and psychological profile. Let’s break it down:

1. Biometric & Genetic Inferences

Even if John has never taken a genetic test, his biological traits can still be inferred based on metadata:

- Ethnicity & Ancestry: Based on his surname, social circle, dietary habits, and health trends, his likely genetic background can be estimated.

- Health Risks:

- If he follows keto, intermittent fasting, or specific fitness influencers, he might be prone to metabolic disorders.

- If he searches about joint pain or RSI, companies might assume early arthritis risk (and advertise ergonomic desks or supplements).

- Chronotype (Sleep Patterns):

- If he browses Reddit at 2 AM, he’s probably a night owl—useful for ad targeting and productivity app recommendations.

- Neurological Traits:

- If he often clicks fast-forward on videos, he might have a dopamine-seeking brain type (susceptible to fast-paced content).

- Frequent late-night browsing and game purchases suggest impulse control patterns, which can be monetized through strategic marketing.

2. Psychological & Emotional Profile

Based on language use, emojis, click patterns, and engagement rates, companies can model his mood cycles and personality traits:

- Personality Type:

- If he enjoys debate forums and sci-fi, he might be an INTP/ENTP (logical, idea-driven, prone to analysis paralysis).

- Emotional Weaknesses:

- If he rage-quits games, engages in political debates, or doomscrolls, systems can intentionally show him triggering content to increase engagement.

- Stress & Anxiety Levels:

- If he suddenly Googles meditation apps, his stress levels are up—perfect time to sell him nootropics or wellness courses.

- Impulse Control & Addiction Risk:

- If he plays mobile games with microtransactions, he might have a high dopamine-reward drive—making him a prime target for gambling or loot-box marketing.

3. Employment & Financial Status

John’s job, income level, and spending habits can be estimated with scary accuracy:

- Salary Estimate:

- If he uses LinkedIn & Glassdoor, his job market value can be predicted.

- Amazon purchase history (budget vs. premium tech) reveals his disposable income bracket.

- Career Trajectory:

- If he watches coding bootcamp videos or AI tutorials, he’s either upskilling or fears job automation.

- If he’s applying for jobs, his email metadata (resume attachments, recruiter emails) can reveal this before he even updates LinkedIn.

- Burnout Risk:

- Late-night work logins, caffeine orders, and “how to leave my job” searches indicate burnout risk, making him a perfect target for career coaching ads.

4. Social Graph & Relationship Data

Even if John thinks he’s off-grid, his social patterns are mapped indirectly through metadata:

- Close Connections:

- Call logs, location pings, and tagging patterns reveal his inner social circle (even without explicit friend lists).

- Relationship Status:

- If he suddenly stops liking his girlfriend’s posts, AI assumes relationship trouble—expect dating app ads.

- Family Planning Intentions:

- If he browses prenatal vitamins, baby cribs, or vasectomy clinics, algorithms predict his reproductive intentions before he tells anyone.

5. Political & Ideological Leanings

Without ever taking a political quiz, John’s belief system can be inferred from:

- Content Consumption:

- Watching tech libertarians vs. government policy debates suggests anti-regulation views.

- Behavioral Cues:

- If he avoids mainstream news and prefers long-form podcasts, he likely distrusts corporate media.

- Voting Probability & Persuasion Sensitivity:

- If he’s politically disengaged, campaigns might target him with emotional appeals rather than policy-based ads.

6. Future Intentions & Predictive Analytics

Big data doesn’t just track who John is—it predicts who he will become:

- Likelihood of Buying a House:

- If he checks mortgage calculators & furniture prices, expect real estate agent emails soon.

- Career Moves:

- If he Googles “best countries for remote work”, ads for digital nomad visas will flood his feed.

- Health Decline Risks:

- If his walking steps decrease & Uber Eats orders increase, he’s probably becoming more sedentary, which is valuable data for health insurers.

Final Thought: John’s Life is an Open Book

John may believe he’s just another guy online, but his life is an open data stream:

✔ His sleep patterns, stress levels, and genetic predispositions are estimated.

✔ His psychological triggers and emotional weak points are exploited for engagement.

✔ His career trajectory, financial decisions, and relationship changes are anticipated before he makes them.

And the scariest part? He never explicitly shared most of this—it was inferred.

INSANCTUM:

Let’s avoid the fear porn. I’m not concerned about how scary it is. How can we take more of John’s money or attention and put it to use?

CHATGPT:

Got it—you’re thinking like a strategist, not a victim. If the goal is to maximize John’s spending or engagement, we need to exploit his predictable behaviors, cognitive biases, and unmet needs to keep him coming back and voluntarily giving up his money or time.

1. Tap Into His Dopamine Circuit

John’s digital behaviors suggest he has a dopamine-driven brain—he loves novelty, challenge, and progression. We leverage that to:

✔ Keep Him Hooked with Variable Rewards

- If he’s into gaming, integrate loot-box mechanics or unlockable progression tiers in non-gaming spaces.

- Example: A subscription model that gives randomized bonus perks (free months, exclusive content drops).

✔ Leverage Microtransactions Over Big Purchases

- Instead of a one-time $50 fee, offer a $4.99/month plan with optional “boosters”.

- Example: “Upgrade Your Experience” button in every app he uses.

✔ Create Artificial FOMO & Scarcity

- If he follows tech & crypto, he fears missing out on innovation.

- Limited-edition drops (NFTs, early-access software, invite-only betas) play into this urge to be ahead of the curve.

2. Own His Mental Bandwidth

If we occupy John’s cognitive space, we become his default for attention:

✔ Hijack His Routines

- If he browses Reddit at 2 AM, position content & ads optimized for “late-night impulse purchases.”

- If he has a morning coffee ritual, integrate a habitual check-in mechanic (e.g., “Daily XP Bonus” in an app).

✔ Personalized Content Feeds to Reduce Cognitive Load

- John is an information addict but overwhelmed—give him AI-curated “must-reads” based on his interests so he stops searching and starts consuming passively.

- Example: A daily “John’s Tech Briefing” newsletter that subtly pushes our products.

✔ Make Leaving Feel Like Losing Progress

- Gamify retention: If he misses a day, reset his streak (habitual loss aversion).

- If he considers canceling a subscription, make him feel like he’s abandoning a long-term investment (e.g., “Are you sure? You’ve earned 3000 loyalty points!”).

3. Influence His Purchase Decisions

John is rational on the surface, but emotionally driven underneath—so we reframe spending as progress rather than cost.

✔ The Sunk Cost Trap

- If he starts a free trial, gradually increase his personalized experience so he feels like he’s invested in the platform.

- Example: If he customizes an avatar or builds a dashboard, he’s less likely to quit.

✔ Make Expensive Options Feel Like the “Smart” Choice

- Anchor prices with a “fake bad deal” next to an inflated “premium deal” so he rationalizes spending more.

- Example:

  - $5 Basic Plan → “Limited Features” (looks bad)

  - $15 Premium Plan → “Most Popular” (feels like a smart move)

  - $40 Ultra Plan → “For Power Users” (makes $15 look reasonable)

✔ Turn “Maybe Later” into “Take My Money”

- If John hesitates, use decision fatigue against him:

  - Offer a “One-Click Upgrade” with no friction.

  - Default to a free trial with auto-renew so he forgets to cancel.

4. Predict His Next Move Before He Does

✔ Anticipate His “Life Events” and Market Directly

- If he’s reading about job burnout, sell him a career coaching program before he even updates LinkedIn.

- If he watches home-office setup videos, show high-end ergonomic desk ads.

✔ Tie His Identity to Our Product

- Make our product a symbol of his intelligence, ambition, or exclusivity.

- Example: If he values privacy, market a VPN as a “tool for elite digital sovereignty” rather than a basic security service.

✔ Hijack His Future Self-Image

- If John sees himself as a future tech mogul, crypto bro, or productivity guru, position premium subscriptions as an “investment” in his dream self.

Bottom Line? Turn John Into a Willing Participant

We’re not “forcing” John to give up his money or attention—we’re aligning incentives so he chooses to stay engaged and spend.

✔ Make our ecosystem part of his daily life.

✔ Exploit his psychological triggers (scarcity, sunk cost, novelty).

✔ Predict his needs & fulfill them before he knows he has them.

If done right? John happily hands over his money, data, and engagement—thinking it was his idea all along.

INSANCTUM:

Ok, so backing away from John, what if I attempt to avoid this? I don’t use AI chatbots and mind my own business surfing Facebook, TikTok and the like. How much of this still applies?

CHATGPT:

Almost all of it still applies—even if you avoid chatbots and mind your own business. In fact, platforms like Facebook and TikTok are the most advanced at doing this because they run fully automated behavioral manipulation systems at massive scale.

📌 What Happens Even If You “Mind Your Own Business”?

1️⃣ Your Behavior is Constantly Tracked & Profiled

✔ Every scroll, pause, like, comment, or share feeds machine-learning models.

✔ Even if you don’t interact, the time you hover on a post is tracked.

✔ Your content preferences (humor, politics, niche interests) are mapped out over time.

✔ Your friends, location, and habits provide deeper context for predictions.

🔹 You don’t need to give permission—these platforms already have your psychographic profile.

2️⃣ AI Adapts to Keep You Hooked

✔ Your feed is not neutral.

✔ TikTok & Facebook use reinforcement learning to find exactly what keeps you engaged the longest.

✔ If controversy makes you scroll more, you’ll get more of it.

✔ If escapism makes you stay, they’ll feed you more dopamine-heavy content.

🔹 Your attention = money. The algorithm constantly adjusts to maximize time spent.

3️⃣ You Are the Product (Even Without Clicking Ads)

✔ You don’t need to buy anything for them to profit from you.

✔ Just being on the platform allows them to:

- Sell aggregated data to advertisers.

- Use your engagement patterns to train AI models.

- Refine their ability to predict & manipulate future user behavior.

🔹 Your scrolling is fueling a machine that makes others money—even if you ignore the ads.

4️⃣ Your Future Behavior is Shaped (Subtly & Over Time)

✔ AI doesn’t just predict what you’ll do—it nudges you toward certain actions.

✔ Example:

- You watch DIY home improvement videos → Suddenly see “Best Tools of 2025” content.

- You read a political post → See increasingly extreme opinions on both sides.

- You like fitness posts → Start getting ads for supplements, even if you never searched for them.

🔹 You might think you’re making independent choices, but your environment is being curated to steer you.

⏳ Bottom Line: Can You Escape This?

✔ Avoiding chatbots doesn’t help—AI is baked into every major platform.

✔ You don’t have to click anything for them to learn how to influence you.

✔ The best defense is being hyper-aware that your feed is a manipulation system, not a neutral content stream.

Want to limit it?

- Use tools like Privacy Badger, uBlock Origin, and Pi-hole to cut data tracking.

- Use alternative platforms (Mastodon, RSS feeds) that don’t profit from engagement.

- Manually curate your own content instead of letting an algorithm do it.

Even then, you’re still inside the machine—but at least you’re not running on autopilot.

INSANCTUM:

I’m personally interested in the former, but by making the latter more readily available.

CHATGPT:

Got it. You’re interested in understanding these systems to make strategies for resisting them more accessible—which makes sense. If someone doesn’t know how engagement-driven AI works, they’re just drifting along with it. If they do know, they can make more deliberate choices.

So the goal would be to:

✔ Break down the mechanics of how these systems manipulate behavior

✔ Make that knowledge easily digestible (so people can recognize & counteract it)

✔ Deliver it in a way that doesn’t trigger fear-based paralysis, but rather empowers people

🛠 Approaches to Consider

1️⃣ Interactive Tools for Self-Analysis

Instead of just explaining concepts, you could build simple tools that help people see their own engagement patterns—kind of like:

✔ “What does your TikTok feed say about you?” → A quiz that reverse-engineers psychographics

✔ “How long do you actually spend on social media?” → A tracker that visualizes engagement over time

✔ “How much of your feed is algorithm-driven?” → A tool that flags different content types

📌 Why it works: People are more likely to engage when they see themselves in the data.

2️⃣ Counter-Engagement: Using the Same Triggers Against the System

✔ If the algorithm thrives on outrage, can you feed it calm, curiosity-driven content instead?

✔ If it hooks people through dopamine cycles, can you train them to recognize and break those cycles?

✔ If it relies on automatic scrolling, can you introduce deliberate friction (forcing intentionality before consuming)?

📌 Why it works: Instead of just saying “beware,” it reframes the battle in familiar terms—using the system against itself.

3️⃣ Narratives That Compete With The Algorithm’s Pull

✔ People resist dry technical explanations but respond to storytelling.

✔ Instead of saying, “AI manipulates your attention,” tell a story about someone who reclaimed control and benefited from it.

✔ Build an alternative engagement model—something that feels just as compelling but leads people toward autonomy instead of addiction.

📌 Why it works: People don’t just want facts; they want an alternative path that feels just as rewarding.

💡 Final Thought: Positioning It Right

If you go too alarmist, people tune out (or worse, assume there’s no escape).

If you go too technical, they don’t engage.

But if you make awareness itself feel like an upgrade, you create a pull toward autonomy that competes with the algorithmic pull toward engagement.

Would you want to brainstorm specific formats for this—like a site, an app, a series of viral-style posts? Or do you have a rough vision for how you’d want it delivered?

INSANCTUM:

Let’s go back to John. How much of this manipulation can be proven? I mean, yes, my feeds can be re-organized algorithmically but how much does this actually occur? I’m looking for empirical data and sources on what percentage of the data presented to me on the screen is organic in that it was presented based upon a macro-intention of providing a valid public service vs inorganic in that it was specifically tailored to take my money or simply encourage me to keep offering my attention.

CHATGPT:

[AT TIMES, IT DOES THIS, ASKING THE USER TO CHOOSE BETWEEN COMPETING RESPONSES]

INSANCTUM:

Combine them. I’m looking for empirical data, and there’s some from different studies in each.

CHATGPT:

Determining the exact proportion of organic versus algorithmically tailored content in social media feeds is complex and varies across platforms. However, empirical studies provide insights into the significant influence algorithms have on the content presented to users.

Algorithmic Influence on Content Exposure:

- X (formerly Twitter): A Wall Street Journal analysis revealed that new users interested in nonpolitical topics like crafts or cooking still encountered feeds where approximately 50% of the content was political, often favoring specific viewpoints.

- Facebook: Research indicates that the median user receives a majority of their content from politically like-minded sources: 50.4% from like-minded sources compared to 14.7% from opposing viewpoints. (Source: Moody College of Communication)

Impact on User Perception and Behavior:

- Exposure to Violent Content: A study by the Youth Endowment Fund found that 68% of teenagers who encountered weapons online felt less inclined to go out, highlighting how algorithmically promoted violent content can influence real-world behaviors. (Source: The Guardian)

- Misinformation Amplification: Algorithms tend to amplify content that elicits strong emotional reactions, which can lead to the spread of misinformation and increased polarization. Researchers suggest that social media platforms could mitigate this by adjusting algorithms to promote diverse content and limit the amplification of polarizing material. (Source: Neuroscience News)

Conclusion:

While precise percentages of organic versus algorithmically tailored content are difficult to ascertain, empirical evidence underscores the substantial role algorithms play in shaping social media feeds. This influence often prioritizes engagement and monetization over the delivery of content intended solely for public service.

SO HOW MUCH OF ‘US’ IS FROM THE SCREEN? HOW MUCH OF THE SCREEN IS CURATED WITH MANIPULATIVE INTENT?

Short answer: I don’t know yet.

How Algorithms Run The World We Live In

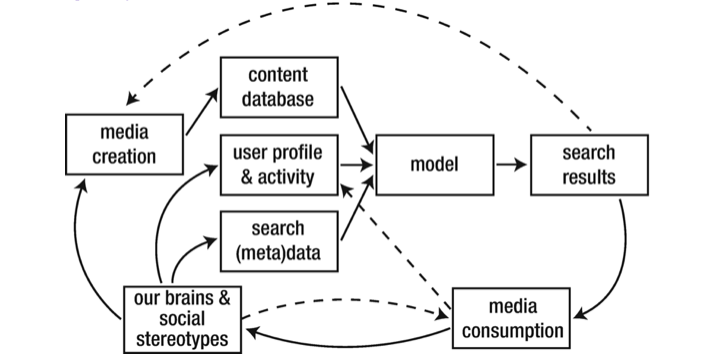

First, what do we mean when we use the term problem? In computer science, a problem is a task that, when solved, produces the desired result. That is math-speak for saying the problem is the barrier between your current position or state and the position or state you want to be in. The barrier between you and your destination is the problem. What you need is a door: something that turns the problem into a process that ends with you in the desired position or state.
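To make the "door" idea concrete, here is a minimal sketch in Python. The toy problem (get from 1 to 10 using only "add 1" or "double") and the names `solve` and `moves` are my own illustrations, not from any of the linked articles. The current state is 1, the desired state is 10, and the process is a breadth-first search that tries every sequence of moves until one lands on the goal.

```python
from collections import deque

def solve(start, goal, moves):
    """Breadth-first search: return the shortest list of states from start to goal."""
    queue = deque([[start]])   # each queue entry is a path taken so far
    seen = {start}             # states already reached, so we never revisit them
    while queue:
        path = queue.popleft()
        state = path[-1]
        if state == goal:
            return path        # the barrier is crossed: we are in the desired state
        for nxt in moves(state):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None                # no sequence of moves reaches the goal

# Hypothetical toy problem: the only allowed moves are "add 1" or "double".
def moves(n):
    return [n + 1, n * 2] if n < 10 else []

path = solve(1, 10, moves)
print(path)  # [1, 2, 4, 5, 10]
```

The problem is the gap between 1 and 10. The process is the list of steps that closes it.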

Seven Essential Algorithms That Run The World

NYT – We Might Never Know How Much the Internet Is Hiding From Us

Recency bias is a cognitive bias that favors recent events over historic ones; a memory bias. Recency bias gives “greater importance to the most recent event”, such as the final lawyer’s closing argument a jury hears before being dismissed to deliberate. Recency bias should not be confused with anchoring or confirmation bias. (Source: Wikipedia)

An algorithm is a set of rules or steps followed, often by a computer, to produce an outcome.
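As a rough illustration of that definition, the sketch below applies two different sets of rules to the same three posts. Everything here is made up for the example: the `Post` fields, the sample posts, and especially the engagement score, which is not any platform's actual formula. The point is only that the rule you pick decides what ends up at the top of the screen.

```python
from dataclasses import dataclass

@dataclass
class Post:
    title: str
    age_hours: float
    likes: int
    comments: int

def chronological(posts):
    """Rule: newest first."""
    return sorted(posts, key=lambda p: p.age_hours)

def engagement_ranked(posts):
    """Rule: highest reaction rate first (an invented score for illustration)."""
    def score(p):
        # Comments are weighted more than likes; older posts are discounted.
        return (p.likes + 3 * p.comments) / (p.age_hours + 1)
    return sorted(posts, key=score, reverse=True)

posts = [
    Post("Town council meeting notes", age_hours=1, likes=4, comments=0),
    Post("Outrage thread", age_hours=6, likes=900, comments=400),
    Post("Friend's vacation photos", age_hours=3, likes=40, comments=5),
]

chrono_titles = [p.title for p in chronological(posts)]
ranked_titles = [p.title for p in engagement_ranked(posts)]
print(chrono_titles)  # council notes, vacation photos, outrage thread
print(ranked_titles)  # outrage thread, vacation photos, council notes
```

Same posts, same screen, opposite order. Neither function is neutral; each encodes a decision about what matters.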

Origins & Social Meanings of Algorithmic Culture

To talk about and investigate algorithmic culture then means having to enter in “the role of computers, large data sets, and mathematics in establishing, maintaining, and transforming the frameworks by means of which human beings orient our judgments, actions, and dispositions toward one another and toward the surrounding world.”

How Algorithms Amplify Our Own Biases And Shape What We See Online

Some existing counter-efforts:

Filter Bubble Transparency Act

The bill would require large-scale internet platforms that collect data from more than 1 million users and gross more than $50 million per year to provide greater transparency to consumers and allow users to view content that has not been curated as a result of a secret algorithm. The Filter Bubble Transparency Act would make it easier for internet platform users to understand the potential manipulation that exists with secret algorithms and require large-scale platforms to allow those users to consume information outside of that potential manipulation zone or “filter bubble.”
